Artificial Intelligence is often discussed as though it is a single capability that organizations either “have” or “do not have.” In reality, AI represents a spectrum of system designs that have evolved over time, each with very different capabilities, risks, and responsibilities. Treating all AI systems as equivalent leads to confusion, unrealistic expectations, and poor technology decisions.

Across industries, organizations are currently exploring AI in very different ways. Some are modernizing legacy automation. Others are experimenting with AI agents powered by large language models. A smaller number are beginning to explore more autonomous systems that can plan, adapt, and operate with minimal human intervention.

From experience working with organizations at different stages of digital maturity, Techno Consultancy has observed that most challenges with AI adoption do not stem from a lack of technology. Instead, they arise from a lack of clarity around what kind of intelligence is actually being deployed and what level of responsibility that intelligence carries.

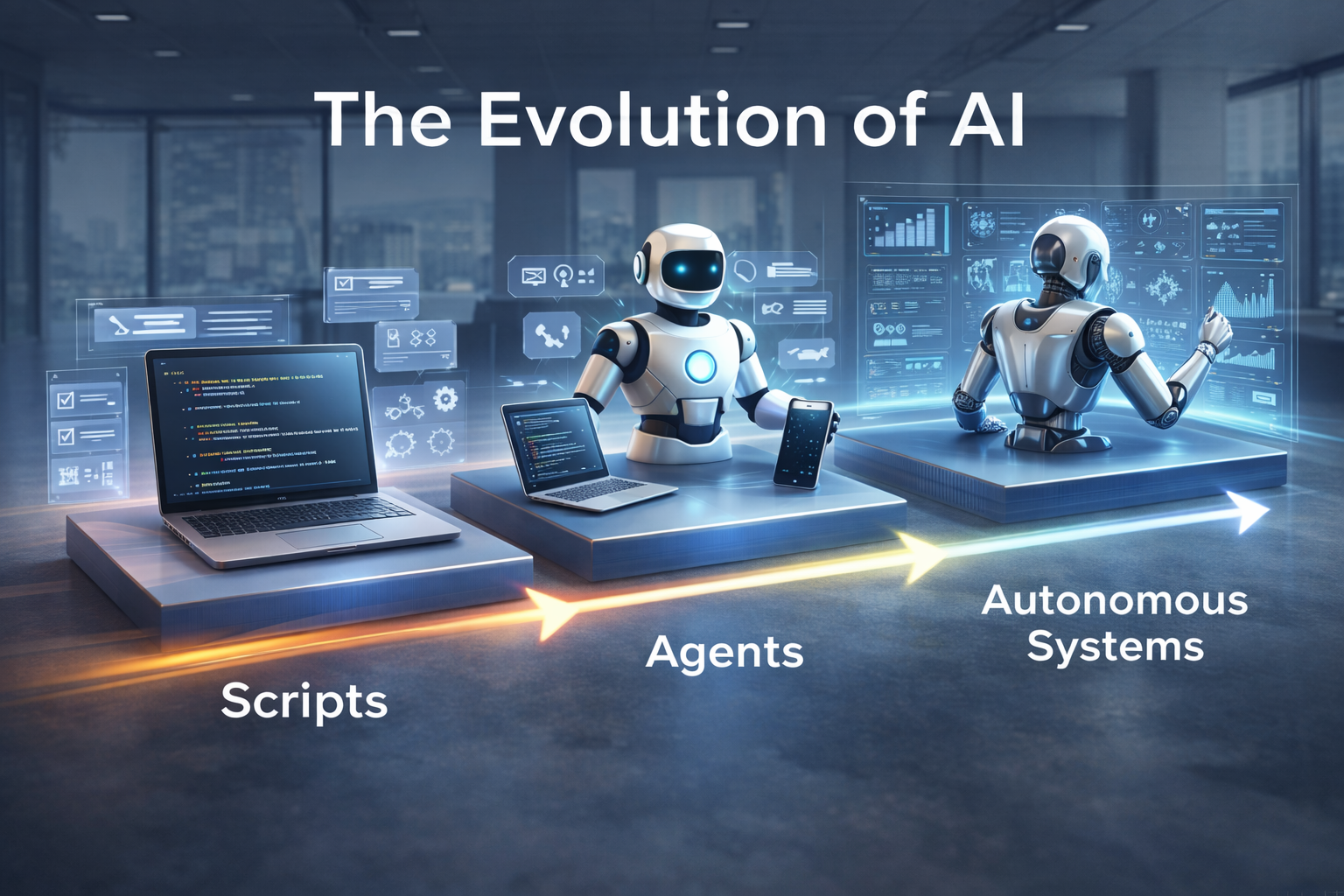

This blog is written to bring structure to that conversation. Rather than viewing AI as a single leap forward, it explains how intelligent systems have evolved through three broad stages:

- Script-based systems

- Agent-based systems

- Autonomous systems

Each stage builds on the previous one, but each also introduces new implications for design, governance, and accountability. Understanding this evolution helps leaders make informed choices about where AI fits into their organization today, and where it may fit in the future.

How Software Systems Evolved from Rule Execution to Context-Aware Decision Making

Scripts represent the earliest stage of intelligent automation and remain one of the most widely used forms of software logic today. At their core, scripts are collections of predefined rules written explicitly by humans. They follow deterministic logic such as “if this condition occurs, perform this action.”

This approach has several advantages. Script-based systems are predictable, easy to test, and relatively simple to govern. They work exceptionally well in stable environments where inputs and outcomes are clearly defined. For decades, scripts have powered workflows such as scheduled data processing, system alerts, form validations, and basic chatbot interactions.
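To make the "if this condition occurs, perform this action" pattern concrete, here is a minimal Python sketch of a rule-based script covering two of the workflows mentioned above, a system alert and a scheduled job. The event fields (`type`, `usage_percent`, `hour`), rule names, and action names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical event shape: a plain dictionary of fields.
Event = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Event], bool]  # "if this condition occurs..."
    action: str                         # "...perform this action"


# Every rule is written explicitly by a human; nothing is inferred.
RULES: list[Rule] = [
    Rule("disk_alert",
         lambda e: e.get("type") == "disk" and e.get("usage_percent", 0) > 90,
         "send_alert"),
    Rule("nightly_export",
         lambda e: e.get("type") == "schedule" and e.get("hour") == 2,
         "run_data_export"),
]


def run_script(event: Event) -> str:
    """Return the action of the first rule whose condition matches."""
    for rule in RULES:
        if rule.condition(event):
            return rule.action
    # Anything the rule authors did not anticipate falls through unhandled.
    return "no_matching_rule"
```

Because the same event always produces the same action, each rule can be tested in isolation. The flip side is that an unanticipated event, such as `{"type": "storage", "usage_percent": 95}`, simply returns `"no_matching_rule"` until someone writes another rule.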

However, scripts are inherently limited by the assumptions they encode. They cannot understand intent, interpret nuance, or adapt when scenarios fall outside predefined rules. Every new condition must be anticipated and manually programmed. As systems grow more complex, this approach becomes increasingly fragile.

In practice, many organizations attempt to stretch script-based automation to cover scenarios it was never designed for. The result is often a patchwork of rules that are difficult to maintain and prone to failure when real-world variability is introduced.

Scripts are effective at executing instructions, but they do not exhibit intelligence. They do not decide, interpret, or learn. They simply follow orders.

Why Rule-Based Systems Reached Their Limits

As digital systems became more user-facing and interactive, the limitations of scripts became more apparent. Human behaviour is rarely predictable. Users phrase questions differently, provide incomplete information, and expect systems to respond intelligently rather than mechanically.

In areas such as education, customer experience, and enterprise knowledge management, rigid automation struggled to deliver acceptable outcomes. The cost of continuously updating scripts to handle edge cases grew rapidly, while user satisfaction stagnated.

This created a clear need for systems that could operate with greater flexibility and contextual awareness. Organizations required software that could make decisions within defined boundaries rather than blindly execute rules. This need gave rise to AI agents.

Agents: Introducing Goals, Context, and Choice

AI agents represent a fundamental shift in how software systems behave. Instead of being defined solely by rules, agents are designed around goals. An agent’s role is not to execute a specific instruction, but to determine the most appropriate action to achieve an objective.

This introduces decision making into software behaviour.

An agent observes inputs, interprets meaning, evaluates available options, and selects an action based on context. It may respond directly, ask clarifying questions, retrieve information, combine sources, or escalate to a human when necessary.

This ability to choose between multiple actions allows agents to handle ambiguity far more effectively than scripts. They are not limited to a single path. They adapt their behaviour to the situation.
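The selection step can be sketched in Python as follows. This assumes the "interpret meaning" step has already happened upstream (in practice often by a language model) and produced a structured `Interpretation`; the field names and scoring weights are hypothetical and exist only to show an agent weighing several options against context rather than following one fixed path.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    RESPOND = "respond"
    ASK_CLARIFYING_QUESTION = "ask_clarifying_question"
    RETRIEVE_INFORMATION = "retrieve_information"
    ESCALATE_TO_HUMAN = "escalate_to_human"


@dataclass(frozen=True)
class Interpretation:
    """The agent's reading of a request. Assumed to be produced upstream,
    e.g. by a language model; here it is passed in directly."""
    in_scope: bool            # inside the boundaries humans defined?
    intent_confidence: float  # 0..1: how sure the agent is about what is asked
    needs_facts: bool         # does a good answer depend on looking something up?
    has_facts: bool           # has that information already been retrieved?


def score_actions(i: Interpretation) -> dict[Action, float]:
    """Estimate how well each option advances the goal (illustrative weights)."""
    answerable = i.has_facts or not i.needs_facts
    return {
        Action.RESPOND: i.intent_confidence * (1.0 if answerable else 0.3),
        Action.ASK_CLARIFYING_QUESTION: 1.0 - i.intent_confidence,
        Action.RETRIEVE_INFORMATION: 0.0 if answerable else 0.8,
    }


def choose_action(i: Interpretation) -> Action:
    # Boundaries are a hard constraint, not something to trade off.
    if not i.in_scope:
        return Action.ESCALATE_TO_HUMAN
    scores = score_actions(i)
    return max(scores, key=scores.get)
```

In a running agent, `choose_action` would typically be called repeatedly: after `RETRIEVE_INFORMATION`, the interpretation is updated with `has_facts=True`, and the next call can settle on `RESPOND`.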

What Makes Agents Feel “Intelligent”

Agents feel intelligent because their behaviour more closely resembles human reasoning. They do not simply check conditions. They evaluate intent.

For example, when a user asks a question, an agent can determine whether:

- The question is conceptual or factual

- The user needs a high-level explanation or a detailed walkthrough

- Additional clarification is required

- The request falls outside acceptable boundaries

This makes agents particularly effective in domains where understanding and communication are critical.

From applied implementations, including those observed by Techno Consultancy across education and enterprise environments, agents often represent the point where AI begins delivering visible, user-facing value. They reduce friction, improve response quality, and handle variation without constant manual intervention.

The Boundaries of Agent-Based Systems

Despite their flexibility, agents are not autonomous. Their objectives, permissions, and constraints are defined by humans. They do not decide what outcomes matter. They decide how to pursue predefined outcomes.

This distinction is important. Agents still require strong governance. Because they make choices, organizations must clearly define:

- What data the agent can access

- What actions it is allowed to take

- When it must escalate to a human

Without these boundaries, agent behaviour can drift in undesirable directions.
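One way to make the three boundaries listed above explicit is a policy object that is checked before any step the agent proposes actually runs. The following is a minimal Python sketch under that assumption; `AgentPolicy`, `ProposedStep`, `review`, and the example source and action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Boundaries set by the deploying organization (names are hypothetical)."""
    readable_sources: frozenset[str]                # what data the agent can access
    permitted_actions: frozenset[str]               # what actions it may take alone
    escalate_actions: frozenset[str] = frozenset()  # actions that need a human


@dataclass(frozen=True)
class ProposedStep:
    action: str
    data_sources: frozenset[str]


def review(step: ProposedStep, policy: AgentPolicy) -> str:
    """Check a proposed step before it runs: 'allow', 'escalate' or 'deny'."""
    if not step.data_sources <= policy.readable_sources:
        return "deny"      # touches data outside the permitted scope
    if step.action in policy.escalate_actions:
        return "escalate"  # hand off to a human
    if step.action not in policy.permitted_actions:
        return "deny"      # anything not explicitly permitted is refused
    return "allow"
```

For example, a policy that allows reading `course_catalog` and `faq`, permits `answer`, and escalates `change_enrollment` would deny an answer drawn from `student_records` and route enrollment changes to a person. Denying by default, and enforcing the check outside the agent rather than trusting it to police itself, keeps the boundaries auditable.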

Agents represent assistive intelligence. They extend human capability, but responsibility remains firmly with the organization deploying them.

From Agents to Autonomous Systems

Autonomous systems represent the next stage in AI evolution. While agents respond to situations and execute tasks toward a goal, autonomous systems are designed to manage objectives continuously over time.

An autonomous system does not wait for explicit prompts in the traditional sense. It monitors its environment, evaluates performance, and determines when action is required, all within predefined boundaries.

Agents are reactive. Autonomous systems are proactive. This shift is subtle but significant.

Core Capabilities That Define Autonomous Systems

Several characteristics distinguish autonomous systems from agents.

- Autonomous systems maintain long-term awareness. They analyse trends and patterns across time rather than focusing on individual interactions.

- They engage in multi-step planning. Instead of selecting a single action, they determine sequences of actions and revise plans as conditions change.

- They incorporate feedback loops. Outcomes influence future behaviour, allowing the system to improve its effectiveness over time.

- They operate with reduced dependency on direct human input. Humans define objectives, constraints, and safeguards, but the system manages execution independently.

These capabilities enable powerful outcomes, but they also raise the level of responsibility significantly.
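The capabilities above can be illustrated with a deliberately simple Python control loop: it keeps a history (long-term awareness), decides for itself when action is needed, splits a correction into bounded steps (multi-step planning), and adjusts its own aggressiveness from results (feedback). This is a sketch under stated assumptions, not a description of how any particular autonomous AI system is built; the single numeric metric, `Objective`, `AutonomousLoop`, and all thresholds are hypothetical.

```python
import math
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass(frozen=True)
class Objective:
    """Defined by humans: the goal and the safeguards (values are hypothetical)."""
    target: float     # desired level of the monitored metric
    tolerance: float  # how far the trend may drift before acting
    max_step: float   # largest single adjustment the system may make


@dataclass
class AutonomousLoop:
    objective: Objective
    window: int = 5    # number of recent observations averaged into the trend
    gain: float = 0.5  # fraction of the gap to correct; tuned by feedback
    history: list[float] = field(default_factory=list)

    def observe(self, value: float) -> None:
        """Monitor: keep a running record instead of reacting to one data point."""
        self.history.append(value)

    def trend(self) -> float:
        return mean(self.history[-self.window:])

    def needs_action(self) -> bool:
        """Decide when to act: only if the recent trend drifts out of tolerance."""
        return abs(self.objective.target - self.trend()) > self.objective.tolerance

    def plan(self) -> list[float]:
        """Multi-step planning: split the correction into steps within max_step."""
        correction = self.gain * (self.objective.target - self.trend())
        steps = math.ceil(abs(correction) / self.objective.max_step)
        return [correction / steps] * steps if steps else []

    def learn(self, expected: float, actual: float) -> None:
        """Feedback loop: nudge the gain if actions under- or over-shoot."""
        if abs(actual) < abs(expected):
            self.gain = min(1.0, self.gain * 1.1)
        elif abs(actual) > abs(expected):
            self.gain = max(0.1, self.gain * 0.9)

    def run_cycle(self, value: float, apply: Callable[[float], None]) -> list[float]:
        """One monitor-evaluate-plan-act pass; returns the steps it executed."""
        self.observe(value)
        if not self.needs_action():
            return []
        steps = self.plan()
        for step in steps:
            apply(step)  # the caller decides what an adjustment actually does
        return steps
```

Note that humans still own `Objective`: the loop chooses when and how to act, but only within the target, tolerance, and step limits it is given.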

Why Autonomy Changes the Risk Profile

As AI systems become more autonomous, the impact of their decisions expands.

A script failure affects a task. An agent failure affects a workflow. An autonomous system failure affects outcomes at scale.

This is why autonomy requires a fundamentally different approach to governance. Transparency, auditability, and escalation mechanisms are no longer optional. They are essential.

From a practical standpoint, we at Techno Consultancy have observed that many organizations are drawn to autonomy for its efficiency, but underestimate the operational discipline required to manage it responsibly.

The Role of Human Oversight in Autonomous Systems

Autonomy does not mean the absence of humans. It means changing where humans intervene.

In many successful deployments, autonomous systems operate within hybrid models. The system monitors, analyses, and recommends actions, while humans retain approval authority for high-impact decisions.

This approach allows organizations to benefit from automation and optimization without relinquishing accountability.
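A hybrid model of this kind can be sketched as an approval gate: low-impact recommendations execute automatically, high-impact ones wait for a named person, and every outcome is written to an audit log. The Python below is a minimal sketch assuming each recommendation carries a numeric impact estimate; `Recommendation`, `HybridGate`, and the threshold value are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Recommendation:
    description: str
    impact: float  # hypothetical 0..1 estimate of how consequential the action is


@dataclass
class HybridGate:
    """The system recommends; humans approve anything high-impact."""
    approval_threshold: float = 0.5  # impact at or above this needs a human
    pending: list[Recommendation] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def submit(self, rec: Recommendation,
               execute: Callable[[Recommendation], None]) -> str:
        if rec.impact >= self.approval_threshold:
            self.pending.append(rec)
            self.audit_log.append(f"queued for approval: {rec.description}")
            return "pending_approval"
        execute(rec)
        self.audit_log.append(f"auto-executed: {rec.description}")
        return "executed"

    def approve(self, rec: Recommendation, approver: str,
                execute: Callable[[Recommendation], None]) -> None:
        """A named human signs off; raises ValueError if rec is not pending."""
        self.pending.remove(rec)
        execute(rec)
        self.audit_log.append(f"approved by {approver}: {rec.description}")
```

Raising `approval_threshold` only as monitoring and trust mature is one way to express autonomy being earned gradually, while the audit log supports the transparency and auditability that autonomous systems require.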

In regulated or high-risk environments, this balance is particularly important. Full autonomy is rarely the starting point. It is something that is earned gradually as trust, monitoring, and governance mature.

Choosing the Right Level of Intelligence

One of the most common mistakes organizations make is assuming that more intelligence automatically leads to better outcomes. In reality, the appropriate level of intelligence depends on context.

Scripts remain ideal for predictable, repeatable processes. Agents excel where interpretation and flexibility are required. Autonomous systems are valuable where continuous optimization delivers measurable benefit.

Maturity in AI adoption is not about deploying the most advanced systems possible. It is about aligning intelligence with real-world needs, risk tolerance, and organizational readiness.

Conclusion

The evolution from scripts to agents to autonomous systems illustrates how AI has progressed from execution to decision-making and, ultimately, toward outcome management.

Each stage plays a distinct role:

- Scripts provide reliability and control

- Agents provide adaptability and contextual reasoning

- Autonomous systems provide long-term optimization

Organizations that succeed with AI are those that understand this continuum and apply intelligence deliberately rather than indiscriminately. They resist hype-driven adoption and focus instead on alignment between capability, governance, and purpose.

From an applied perspective, including the work Techno Consultancy engages in across different domains, the most sustainable AI strategies are built on clarity rather than novelty. Understanding where a system sits on this evolutionary path is the foundation for responsible, effective, and future-ready AI adoption.

AI is not a destination to reach. It is a capability to be applied thoughtfully, one stage at a time.

Ready to Transform Your Business?

Partner with Techno Consultancy to implement advanced solutions tailored to your organization's unique needs.
